Are you currently working in the UK public sector and finding yourself immersed in the fast-changing world of AI, particularly generative AI? If so, you're certainly not alone. The Cabinet Office and the Central Digital and Data Office (CDDO) have recently published the Generative AI Framework for HM Government, a comprehensive guide that is set to shape how AI is used across the public sector.

The Framework serves as a playbook for public sector bodies, particularly on safeguarding data, preserving privacy and strengthening defences against cyber risks. It is a well-thought-out guide that offers a practical roadmap for organisations venturing into AI.

Let's talk numbers. According to a recent survey, over 70% of public sector leaders see AI as a tool to improve services. However, the same survey reveals that nearly 60% are concerned about the ethical implications. That’s why this framework is so timely: it addresses these concerns head-on, setting out how to balance innovation with responsibility.

In this article, we focus on the aspects that help organisations ensure data security, uphold privacy and establish strong governance whilst developing or using AI.

Overview

This framework is more than a collection of rules; it is a guide to the responsible and effective use of generative AI. It sets out ten principles that move government towards using AI in an ethical, secure and efficient manner.

The ten principles are designed to guide and improve the implementation of generative AI in the UK public sector. Their purpose is to ensure that AI technologies are used in a way that is ethical, lawful, secure, and effective. They provide a framework for understanding AI's capabilities and limitations, ensuring human oversight, and managing the entire lifecycle of AI systems. These principles also emphasise collaboration, skill development, and alignment with organisational policies, ensuring that AI initiatives are well-integrated and beneficial for public services.

We must acknowledge that AI is a complex field that goes beyond coding and algorithms. It requires an in-depth understanding of its limitations and the impact it can have. The framework stresses the importance of gaining a comprehensive understanding of AI technologies. We are witnessing a shift from a purely technical perspective to a more holistic approach, where ethical and legal considerations take centre stage.

Who should read the Framework?

The Framework is primarily aimed at UK public sector organisations and professionals involved in implementing and managing AI technologies. It covers the responsible and effective use of generative AI, addressing legal, ethical, security and governance issues. This makes it particularly relevant for decision-makers, IT professionals and policy developers within government who are looking to integrate AI into their operations and services.

Ten core principles

This framework builds on the five principles set out in the government white paper A pro-innovation approach to AI regulation, which guide and inform AI development in all sectors. Its ten principles are:

- Understand AI and its limitations: Acknowledging the capabilities and constraints of AI technology.

- Lawful and Ethical Use: Ensuring AI is used within legal and ethical boundaries.

- Security: Prioritising the security of AI systems to protect data and operations.

- Human Control: Maintaining human oversight and control over AI systems.

- Lifecycle Management: Managing AI systems effectively throughout their lifecycle.

- Choosing the Right Tools: Selecting appropriate AI tools and technologies.

- Collaboration: Encouraging collaboration in the development and use of AI.

- Commercial Involvement: Working with commercial colleagues from the start of AI initiatives.

- Skills Development: Developing necessary skills for effective AI use.

- Aligning with Organisational Policies: Ensuring AI aligns with the broader policies and goals of the organisation.

In this article we focus on data protection and privacy, security, and governance, drawing mainly on principles 2, 3 and 4.

Use cases to avoid

Firstly, we look at several use cases that the Framework suggests should be avoided. Given the current limitations of generative AI, there are many use cases where its use is not yet appropriate.

- Fully automated decision-making: significant decisions, such as those involving someone’s health or safety, should not be made by generative AI alone.

- High-risk / high-impact applications: generative AI should not be used on its own in high-risk areas which could cause harm to someone’s health, safety, fundamental rights, or to the environment.

- Low-latency applications: generative AI operates relatively slowly compared to other computer systems and should not be used in use cases where an extremely rapid, low-latency response is required.

- High-accuracy results: generative AI is optimised for plausibility rather than accuracy and should not be relied on as a sole source of truth, without additional measures to ensure accuracy.

- High-explainability contexts: like other solutions based on neural networks, the inner workings of a generative AI solution may be difficult or impossible to explain, meaning that it should not be used where it is essential to explain every step in a decision.

Given the many valuable use cases already identified for generative AI, it is prudent to set the cases above aside for now, as their potential risk likely outweighs the value they offer.
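To make "not by generative AI alone" concrete, here is a minimal sketch, assuming a hypothetical case-handling service in which a generative model only drafts a recommendation and any decision flagged as significant must be confirmed by a named human reviewer before it takes effect. The `Draft` and `Decision` classes, the `finalise` function and the `significant` flag are illustrative names, not part of the Framework.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Draft:
    """A recommendation drafted by a generative AI model (hypothetical)."""
    case_id: str
    text: str
    significant: bool  # e.g. affects someone's health, safety or rights


@dataclass
class Decision:
    case_id: str
    outcome: str
    approved_by: Optional[str]  # None means no human signed off


class HumanReviewRequired(Exception):
    """Raised when a significant decision lacks human approval."""


def finalise(draft: Draft, reviewer: Optional[str] = None,
             reviewer_outcome: Optional[str] = None) -> Decision:
    """Turn an AI draft into a decision, enforcing human control.

    Significant decisions are never made by the model alone: they need a
    named reviewer, and the reviewer's own outcome is what gets recorded.
    """
    if draft.significant:
        if reviewer is None or reviewer_outcome is None:
            raise HumanReviewRequired(
                f"case {draft.case_id}: human review required")
        return Decision(draft.case_id, reviewer_outcome, approved_by=reviewer)
    # Low-impact drafts may pass through, recording any reviewer if present.
    return Decision(draft.case_id, draft.text, approved_by=reviewer)
```

For example, calling `finalise` on a significant draft without a reviewer raises `HumanReviewRequired`, while the same draft with a reviewer records the reviewer's outcome, which may differ from the model's suggestion. Putting the check in code, rather than relying on process alone, makes it harder to bypass human control by accident.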

Data protection and privacy

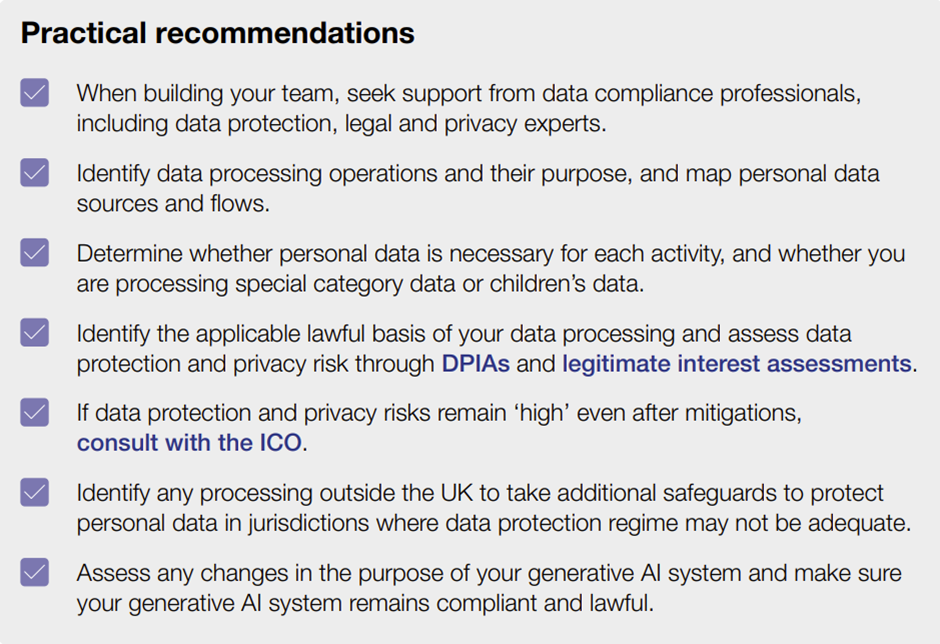

Generative AI systems may process personal data during their training and testing phases, and they can generate outputs that contain personal data, including sensitive information. When using generative AI, it is crucial to protect personal data, comply with data protection law and minimise the risk of privacy breaches from the very beginning.

Organisations developing and deploying generative AI systems must follow the data protection principles set out in the UK General Data Protection Regulation (UK GDPR) and the Data Protection Act 2018, both to safeguard personal data and to ensure ethical data handling when using generative AI technology.

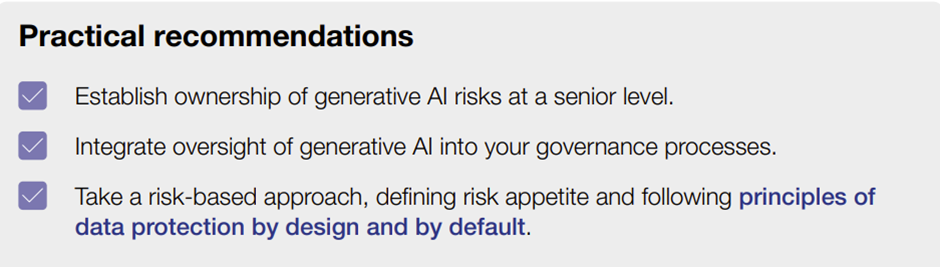

The Data Protection and Privacy section of the Framework emphasises key aspects which must be considered, such as accountability, lawfulness, purpose limitation, transparency, individual rights, fairness, data minimisation, storage limitation, human oversight, and accuracy. It highlights the importance of complying with data protection legislation and the need for ethical considerations in handling personal data when using generative AI. The key takeaway here is the imperative of managing and protecting data privacy throughout the AI lifecycle, ensuring responsible and lawful usage of AI technologies in the public sector.

tmc3 has developed an AI Impact Assessment, a tool for evaluating the potential risks to individuals posed by the creation and use of a specific AI system.

Figure 1 - Data Protection and Privacy Recommendations
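Data minimisation and purpose limitation, two of the aspects listed above, can be enforced before anything reaches a model. As a minimal sketch, assuming records are plain Python dictionaries and that each purpose has an agreed list of permitted fields (the `ALLOWED_FIELDS` mapping, the purpose names and the prompt format are all hypothetical), a prompt builder can drop every field the stated purpose does not need:

```python
from typing import Any, Dict, List

# Hypothetical purpose-to-fields mapping, agreed in advance (e.g. with the DPO).
ALLOWED_FIELDS: Dict[str, List[str]] = {
    "summarise_complaint": ["complaint_text", "service_area"],
    "route_enquiry": ["enquiry_text"],
}


def minimise(record: Dict[str, Any], purpose: str) -> Dict[str, Any]:
    """Keep only the fields permitted for this purpose.

    Unknown purposes are rejected rather than defaulting to "send everything".
    """
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"no data minimisation rule for purpose {purpose!r}")
    return {k: record[k] for k in ALLOWED_FIELDS[purpose] if k in record}


def build_prompt(record: Dict[str, Any], purpose: str) -> str:
    """Build a prompt from minimised data only (illustrative format)."""
    data = minimise(record, purpose)
    lines = [f"{key}: {value}" for key, value in data.items()]
    return f"Task: {purpose}\n" + "\n".join(lines)
```

With a record that also holds a name and email address, `build_prompt(record, "summarise_complaint")` includes only the complaint text and service area. Failing closed on an unrecognised purpose is the design choice that matters here: a new use must be thought through before any data flows.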

Security

The UK Government has a responsibility to ensure that the services it provides do not expose the public to undue risk, which makes security a primary concern for anyone looking to deploy emerging technology, such as generative AI.

As AI's capabilities grow, so do the risks. The Framework doesn't shy away from this. It stresses ensuring security throughout the AI lifecycle, a crucial aspect often overlooked in the rush to innovate.

There are various potential threats and vulnerabilities associated with generative AI in the public sector. Examples include:

- prompt injection threats: using prompts that can make the generative AI model behave in unexpected ways;

- data leakage: responses from the large language model (LLM) reveal sensitive information, for example, personal data;

- hallucinations: the LLM responds with information that appears to be truthful but is actually false.
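One layer of defence against data leakage is to screen model responses before they reach a user. The sketch below is a deliberately simple illustration, assuming responses are plain strings: it uses regular expressions (patterns chosen for this example, not taken from the Framework) to mask email addresses and strings shaped like UK National Insurance numbers. Pattern matching alone will miss much personal data, so treat this as one control among several, not a complete solution.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; real deployments need broader, tested detection.
PATTERNS: List[Tuple[str, re.Pattern]] = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    # Shape of a UK National Insurance number, e.g. "AB 12 34 56 C".
    ("NI_NUMBER", re.compile(r"\b[A-Z]{2}\s?\d{2}\s?\d{2}\s?\d{2}\s?[A-D]\b")),
]


def redact(response: str) -> Tuple[str, List[str]]:
    """Mask personal-data-like strings in a model response.

    Returns the redacted text and the labels of what was found, so the
    event can be logged and reviewed.
    """
    found: List[str] = []
    for label, pattern in PATTERNS:
        response, count = pattern.subn(f"[{label} REDACTED]", response)
        if count:
            found.append(label)
    return response, found
```

Returning the labels as well as the text lets the calling service log that a leak was caught, which feeds the continuous monitoring the Framework asks for.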

The Framework takes each security risk and uses a scenario to illustrate how that vulnerability might be exploited by an application of generative AI in a government context. The list of scenarios is not exhaustive but should be used as a template for assessing the risks associated with a particular application of generative AI.

To combat these risks, key considerations include a comprehensive upfront risk assessment, an understanding of the specific security challenges posed by AI technologies, and robust security measures. This is not a point-in-time action; organisations need to stay vigilant against evolving cyber threats so that AI systems remain safeguarded throughout their lifecycle, protecting sensitive government data and operations.

The Framework calls for a comprehensive security strategy that covers every stage of the AI lifecycle, from development to deployment and beyond. Strategies should be aligned with Secure by Design principles. This also requires continuous monitoring and updating of security protocols to address emerging risks and uphold the integrity and confidentiality of AI systems.

To help stakeholders across government share knowledge and best practices, a cross-government generative AI security group has been established. The group is made up of security practitioners, data scientists and AI experts. If you work in this area, you can request to join the group by emailing: x-gov-genai-security-group@digital.cabinet-office.gov.uk

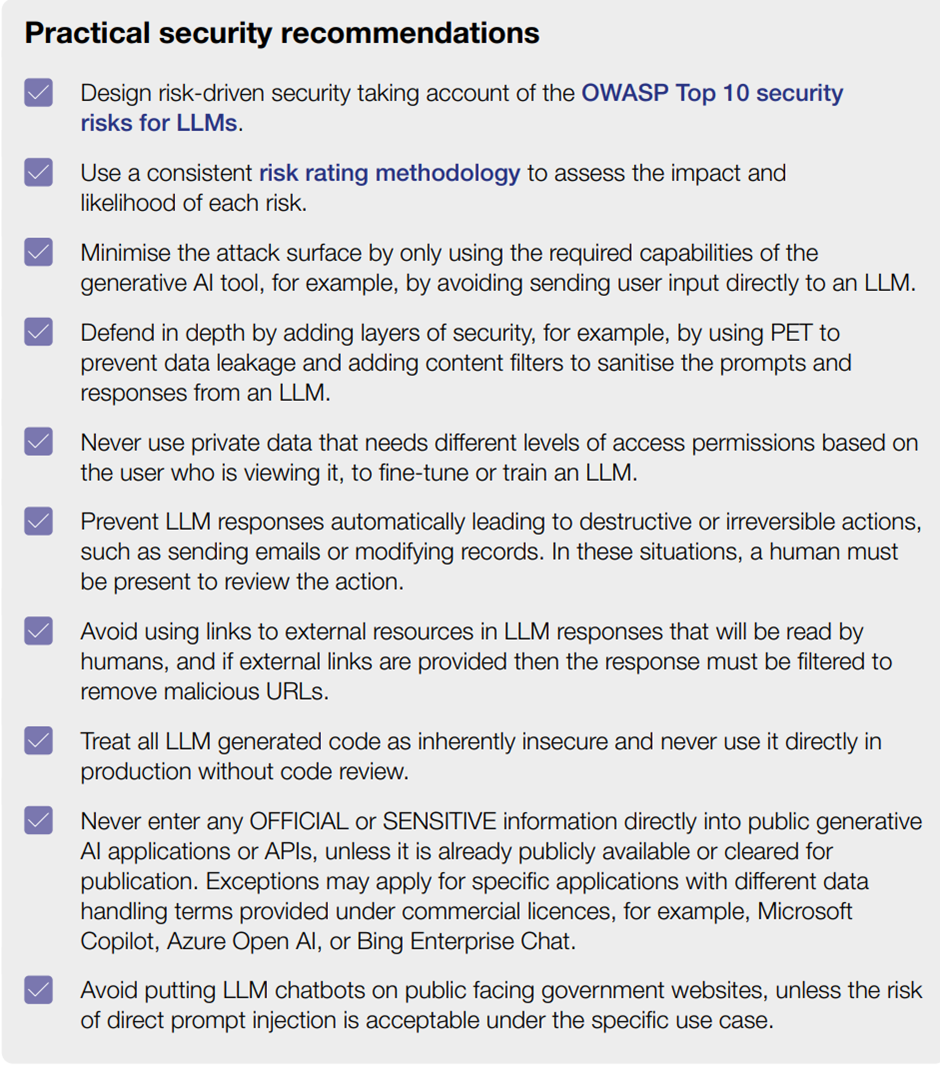

Practical security recommendations offer actionable advice for safeguarding generative AI systems. Key recommendations include implementing robust cyber security measures, conducting thorough risk assessments, and regularly updating security protocols. The Framework suggests fostering a culture of security awareness and preparedness among staff and stakeholders - something which should already be part of any security programme. These measures aim to ensure the ongoing protection and integrity of AI systems within government operations.

Figure 2 - Security Recommendations

Governance

Given the potential risks associated with security, bias and data in AI programmes, robust governance processes are essential. Whether these processes are integrated into existing governance frameworks or require a new framework, they should be centred on continuous improvement, incorporating new knowledge, methods and technologies. They should include:

- identifying key stakeholders representing different organisations and interests, such as civil society organisations and sector experts, to create a balanced view from stakeholders who can support AI initiatives;

- planning for the long-term sustainability of AI initiatives, considering scalability, long-term support, maintenance, and future developments.

Governance is key to establishing effective management and oversight of generative AI technologies. It is important to set clear leadership and accountability for AI initiatives, develop comprehensive governance structures, and ensure alignment with broader organisational policies and goals. There is a fundamental need for transparent decision-making processes, regular review and auditing of AI systems, and the integration of ethical considerations into governance frameworks.

And, of course, there's a focus on the human element. AI is powerful, but it shouldn't operate in a vacuum. The framework advocates human oversight and control, ensuring that AI tools are a complement to, not a replacement for, human decision-making.

Final thoughts

This framework is more than a set of guidelines; it's a catalyst for change. It invites UK public sector organisations to embrace AI, but with caution, responsibility, and a focus on the greater good.

Public sector leaders and professionals should embrace this framework as their guide in the journey of integrating generative AI into operations. Dive deep into understanding its principles, focusing on the ethical use, data protection, and security aspects. Collaborate, innovate, and lead in this AI-driven era, but do so with responsibility and foresight. Actions today will shape the future of AI in public services, making them more efficient, accessible, and trustworthy.

