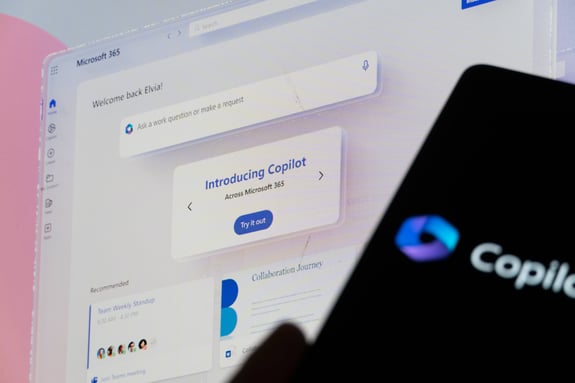

Microsoft Copilot has recently been rebranded and repositioned. The service previously known as Bing Chat Enterprise is now simply called Copilot.

One challenge with this change is that it is not always clear what security and data protection controls each component has, how much control organisations have over it, or how they can restrict its use. In this article, we look at the recent changes to Microsoft Copilot and highlight what organisations need to be aware of to protect themselves from potential risks.

Understanding what Copilot is

The first thing to know is that Copilot is not a single product. Beyond Microsoft Copilot itself, other Microsoft-owned companies have embedded the technology, for example GitHub Copilot.

Most people will have heard of Copilot, but for those who have not, it is Microsoft's brand for its AI technology. It is currently powered mainly by the Large Language Models (LLMs) created by OpenAI (Microsoft holds a 49% stake in OpenAI, and OpenAI runs on the Microsoft Azure platform).

Because the Copilot brand has recently been applied so widely, this article describes some of the most common instances below. To add further potential for confusion, Microsoft announced Microsoft Copilot Studio at its recent Ignite conference. Copilot Studio allows users to build bespoke bots on the Copilot engine and is likely to expand to other use cases in future.

To provide clarity amid the evolving naming scheme, we will use the familiar older names while also referencing the new ones. This should make it easier to understand and navigate the services in question.

Copilot for Windows

Firstly, there is what is most commonly known as Copilot for Windows. It is currently available for Windows 11 and is likely to reach Windows 10 in the new year. It is a slightly complicated product because its behaviour depends on whether the device is signed in with a work or school account. If it is, Copilot for Windows also uses the service previously known as Bing Chat Enterprise (see below). If you use a personal account, it operates standalone and lets you interact with Windows in a more natural way. For example, instead of searching the Start menu for the dark mode setting, you can simply type "turn on dark mode".

Be aware, however, that it can also interact using the consumer Bing Chat (not Enterprise). This version has very few security controls, and its data does not remain within your Microsoft tenancy. This behaviour can be disabled with a GPO setting change.
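The following is a simplified sketch of the behaviour described above. It shows which chat experience a user ends up with, depending on the account type and whether Copilot for Windows has been turned off by Group Policy. The function and class names are hypothetical. The logic only encodes the rules stated in this article and is not Microsoft's implementation.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChatExperience:
    service: str
    # True when the Bing Chat Enterprise data controls apply
    enterprise_data_protection: bool


def copilot_for_windows_chat(
    work_or_school_account: bool, turned_off_by_gpo: bool
) -> Optional[ChatExperience]:
    """Hypothetical model of the account-dependent behaviour described above."""
    if turned_off_by_gpo:
        # An administrator has disabled Copilot for Windows via Group Policy
        return None
    if work_or_school_account:
        # Work/school sign-in: chat goes through Bing Chat Enterprise
        return ChatExperience("Bing Chat Enterprise", enterprise_data_protection=True)
    # Personal account: consumer Bing Chat, data leaves the tenancy's controls
    return ChatExperience("Bing Chat (consumer)", enterprise_data_protection=False)


if __name__ == "__main__":
    for work, gpo in [(True, False), (False, False), (False, True)]:
        print(f"work account={work}, GPO off={gpo}: {copilot_for_windows_chat(work, gpo)}")
```

The useful takeaway is the middle case. A personal account on a managed device lands on the consumer service unless the policy is applied.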

Bing Chat Enterprise

Next, and probably the most useful for most organisations, is the service previously called Bing Chat Enterprise. It is usually accessed via the sidebar in Microsoft Edge and provides a chat prompt very similar to ChatGPT (OpenAI's own interface to its LLMs). Because it can draw on current web data, it avoids the 'point in time' knowledge cut-off that limited earlier systems.

Many organisations allow the use of this solution because its data controls are comparable to those of their existing 365 tenancy. Although the data does leave your tenancy, it is managed securely within Microsoft-controlled data centres, which OpenAI cannot access. However, these data centres can be located in the USA, regardless of where your 365 tenancy is located. This must be considered if data sovereignty or UK GDPR obligations apply to the types of data you intend to use.

Bing Chat Enterprise has no inherent access to devices or tenancy data. It can, however, access data that is pasted into the chat window. It can also read the content of a page or document open in Edge when the user asks it to review that page.

In licensing terms, Bing Chat Enterprise is included in E3, E5, A3 and A5 licences, as well as Business Standard and Business Premium, and it is enabled by default. Disabling it is less straightforward than it sounds, because turning off Bing Chat Enterprise simply leaves users with the consumer version, which has no organisational controls. Blocking it fully requires DNS changes. We urge caution with this approach, as organisations could inadvertently create a less controlled environment without realising it.
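The trap described above can be sketched as a small decision table. The function name is hypothetical. The rules are only those stated in this article: Bing Chat Enterprise is on by default, switching it off falls back to the consumer version, and DNS changes block chat entirely. Exact behaviour may differ in a real tenancy.

```python
from itertools import product


def effective_chat_service(bce_enabled: bool, blocked_via_dns: bool) -> str:
    """Hypothetical model of the outcomes described above; not Microsoft's logic."""
    if blocked_via_dns:
        # DNS changes stop users reaching the chat service at all
        return "blocked"
    if bce_enabled:
        # Default state: enterprise data controls apply
        return "Bing Chat Enterprise"
    # Disabling Bing Chat Enterprise alone leaves users on the consumer version
    return "consumer Bing Chat (no organisational controls)"


if __name__ == "__main__":
    # Print every combination of the two settings
    for bce_enabled, blocked in product([True, False], repeat=2):
        outcome = effective_chat_service(bce_enabled, blocked)
        print(f"BCE enabled={bce_enabled!s:5}  DNS block={blocked!s:5}  -> {outcome}")
```

Only one combination leaves users with no organisational controls: Bing Chat Enterprise disabled without a DNS block. That is why switching it off is not the same as restricting it.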

You can tell that you are using Bing Chat Enterprise by the distinctive cogs shown in Fig 1.


Fig 1 - Bing Chat Enterprise

Copilot for Microsoft 365

The third tool, most often referred to as Copilot for Microsoft 365, is licensed separately. It is currently priced at 30 USD per user per month and is only available to E3 or E5 customers. It is the most deeply embedded version: by default it can access your 365 tenancy, limited to what the user running Copilot has permission to see. Queries and actions can therefore draw on that data directly. Common examples include summarising a meeting or providing insights based on a collection of documents.
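To budget for a rollout, the arithmetic is simply seats × 30 USD × 12 months. The following sketch applies the price and the E3/E5 eligibility rule quoted in this article. The helper name is hypothetical. The figures reflect list pricing at the time of writing and exclude the cost of the underlying E3/E5 licences.

```python
from decimal import Decimal

# List price quoted in this article (USD per user per month); may change
PRICE_PER_USER_PER_MONTH_USD = Decimal("30")
# Base licences this article says are eligible for Copilot for Microsoft 365
ELIGIBLE_BASE_LICENCES = {"E3", "E5"}


def annual_copilot_cost(seats: int, base_licence: str) -> Decimal:
    """Hypothetical helper: yearly Copilot add-on cost for a number of seats."""
    if base_licence not in ELIGIBLE_BASE_LICENCES:
        raise ValueError(f"{base_licence} is not an eligible base licence")
    if seats < 0:
        raise ValueError("seats must be non-negative")
    return PRICE_PER_USER_PER_MONTH_USD * seats * 12


if __name__ == "__main__":
    print(annual_copilot_cost(50, "E3"))  # 50 x 30 x 12
```

Using `Decimal` keeps currency arithmetic exact, which matters once the figure is multiplied across many seats or combined with discounts.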

The privacy and security model of Copilot for Microsoft 365 is very similar to that of Bing Chat Enterprise, with a few exceptions. EU traffic is kept within the EU, with further region-specific enhancements expected. It also respects existing file permissions and sensitivity labels.
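Respecting existing permissions means Copilot can only surface documents the signed-in user could already open. The following sketch illustrates that principle, often called security trimming. Candidate documents are filtered by the user's access and by sensitivity label before anything reaches the model. The `Document` type, the group names and the `blocked_labels` parameter are all hypothetical. Real label handling in Microsoft 365 is richer than a simple block list.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    name: str
    readers: frozenset[str]  # users or groups granted read access (hypothetical)
    sensitivity_label: str = "General"


def documents_for_copilot(
    user: str, user_groups: set[str], docs: list[Document], blocked_labels: set[str]
) -> list[str]:
    """Return only documents the user can read and whose label is not blocked."""
    principals = {user} | user_groups
    return [
        d.name
        for d in docs
        # Permission check first, then the sensitivity label check
        if d.readers & principals and d.sensitivity_label not in blocked_labels
    ]


if __name__ == "__main__":
    docs = [
        Document("q3-board-pack.docx", frozenset({"finance"}), "Confidential"),
        Document("staff-handbook.docx", frozenset({"all-staff"})),
        Document("hr-case.docx", frozenset({"hr"})),
    ]
    print(documents_for_copilot("alice", {"all-staff", "finance"}, docs, {"Confidential"}))
```

This also shows why over-shared files matter. If a sensitive document is readable by a broad group, a permission-respecting assistant will surface it to everyone in that group, so reviewing permissions before rollout is worthwhile.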

Closing thoughts

Copilot represents a significant shift in Microsoft's approach to AI technology integration. This rebranding reflects the broader embedding of AI capabilities across Microsoft's suite of tools, impacting both individual and organisational users. While it offers enhanced functionality and convenience, it also necessitates a thorough understanding of its security, data protection controls, and licensing intricacies to maximise its benefits while mitigating potential risks.

With Copilot integrated into so many applications, organisations must be particularly vigilant about where and how their data is stored and managed.

To discuss how AI and other emerging technologies can affect your organisation's security, and how to get the most value from them in a governed way, reach out today.
